Results from a case study: business outcomes from test automation

One of the teams I have coached has had an outstanding journey over the last few years.

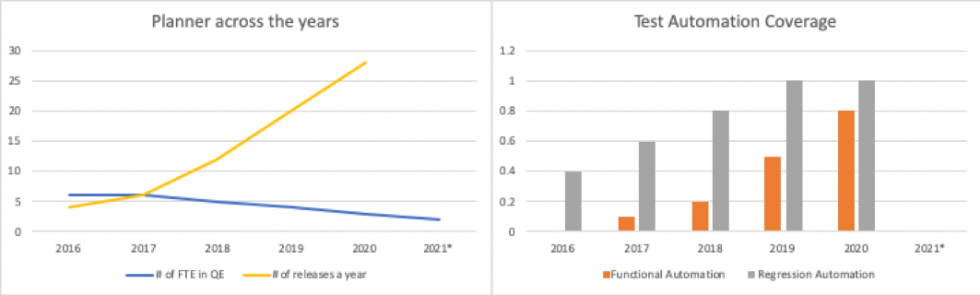

What stands out about this product team (Planner) is that they have invested consistently in regression and functional automation, created opportunities to increase the number of releases per year, and empowered team members to own testing and automation.

The Planner team didn’t start out this way. They had only 4 releases per year, and each release led to several rounds of regression testing to ensure that there were no backward compatibility issues. What really drove the change?

The Planner team (engineering and product management) realized that it was time to take a fresh look at how the automation was working, and stopped treating it as one group’s responsibility. Everyone started pitching in on automation, and so their journey began.

This blog discusses what it takes to make a test automation journey successful. The classic question of mindset versus science often comes up. We will come to a conclusion soon enough.

Number of tools available in the market for test automation

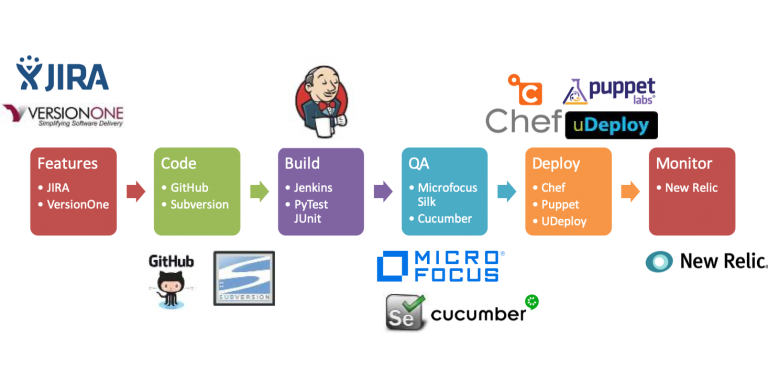

There are a number of tools that make the DevOps chain successful. As the number of options expands, the choices and combinations available to teams continue to explode.

The decision that management struggles with here is analysis paralysis. Rather than spending more time searching for the best possible option, teams need a mindset of experimentation. There are many stories in the automation world where one tool has worked very well on the database side and another in the UI space.

Just as we look at microservices and choose the technology that works best for the business problem, test automation teams also need to widen their range of choices, look at the technical skill sets (or adjacent skill sets) available in the team, choose from that set of options, and continue to experiment.

Such an approach provides a two-pronged benefit:

- Faster code coverage and early feedback on the code that’s deployed in production

- Ability for the team to decide and create an environment of experimentation

An example set of tools available in the market for managing test automation is here.

Ref: Medium

What are the skills required and expected from Testing specialization?

When the entire team starts pitching in on test automation as well as the overall health of the product, what becomes of the role of the traditional Engineering Quality Division in agile teams?

This is an extremely important question that leadership needs to resolve before giving the team wings. The sense of losing ownership of a skill set, and of no longer being central to the Go-NoGo decision, needs to be unlearnt before these new skills can be learnt.

Thinking beyond pass or fail towards Intimate Business Understanding

The central premise of test automation needs to move beyond the number of tests that have passed or failed and towards an intimate understanding of business workflows.

Which confirmation parameters are critical to automate end to end, and which functionalities are used most often?

A matrix of functionality criticality against frequency of usage needs to be used to prioritize test automation at the top of the test pyramid. Shifting the focus from the number of cases or the pass percentage towards the value of the automation scripts, and how closely they mimic an actual day in the life of the user, makes a mammoth difference to confidence in the test automation suite.
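One way to picture the criticality-by-frequency matrix is as a simple score per workflow. The sketch below is only an illustration: the 1 to 3 scales, the multiplication rule, and the workflow names are assumptions, not something taken from the Planner team.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workflow:
    name: str
    criticality: int  # assumed scale: 1 (low) to 3 (high)
    frequency: int    # assumed scale: 1 (rarely used) to 3 (used daily)

    @property
    def priority(self) -> int:
        # Assumed scoring rule: the product of the two matrix axes.
        return self.criticality * self.frequency


def prioritize(workflows: list[Workflow]) -> list[Workflow]:
    """Return workflows ordered from highest to lowest priority score."""
    return sorted(workflows, key=lambda w: w.priority, reverse=True)


# Hypothetical workflows, for illustration only.
backlog = [
    Workflow("export report", criticality=1, frequency=2),
    Workflow("create plan", criticality=3, frequency=3),
    Workflow("archive plan", criticality=2, frequency=2),
]

for wf in prioritize(backlog):
    print(f"{wf.name}: {wf.priority}")
```

Workflows at the top of the resulting list would be the first candidates for end-to-end automation; a team could just as well use a weighted sum or a lookup table if that matches its own matrix better.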

Early and thorough testing and Mentorship over conflict

The role of Quality Engineering teams can be focused on early and thorough testing, and that can be achieved only through automation across the DevOps cycle. It cannot be thought of in a siloed manner.

When we think of testing and quality engineering, our thinking shouldn’t be confined to the system testing or end-to-end testing stages. We should look at the system as a whole. If you refer to this link, you can understand systems thinking better.

When I speak about technical agility with teams, I often get the question: who is responsible for improving tech agility metrics? This is an area that has not been well defined. It is my belief that Quality Engineering teams are better placed than anyone to move the needle on tech agility.

This enables Quality Engineering to move towards mentorship for the product rather than being a gatekeeper of quality.

It is no longer about conflict over whether there is value in automation; it is about creating a sense of ownership of tech agility. So what are the key metrics that one needs to look out for?

Quality Automation Governance Dashboards

There are 4 parameters that each team needs to measure itself against. The actual metric used for each can depend on where the team is in its journey.

- Early Feedback: Feedback can take various forms: from users, from UAT tests, from system tests, from code completion, from DIT deployment. This measures both the actual time it takes to get feedback and how that time has progressed historically.

One of the teams that I work with found that it took 45 minutes to complete one check-in, and that developers checked in code approximately once per story. This led the team to revisit its Definition of Done and working agreements in order to improve the product.

- Code Coverage: Code test coverage from unit, functional and regression tests through the test pyramid

The Planner example above shows how these metrics can be used to justify the level of investment required from the team as a whole. The leadership also continuously provided the support the team needed to increase coverage.

- ROI on automation: How often are the automation scripts run, and how long does it take to deploy them? The more often the scripts are run, the less chance defects have to escape.

For one middleware product, code coverage metrics were constantly shown to justify the automation investment. When we started sharing the ROI in terms of how often the automation scripts were run, a paradigm shift in mindset began. In this team’s case, the automation was run once every release (3 months), and it took approximately a week to set up the automation environment. This was almost 3.5 years ago. Since then, the middleware product has improved its automation to run on a weekly basis with minimal manual effort.

- System Alerts/Monitoring: The number of alerts or monitors that have been set up so that error conditions and storage issues can be captured proactively.
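To make the ROI parameter in the list above concrete, the middleware example can be reduced to simple arithmetic on run cadence. This is a rough sketch: the class name is hypothetical, and treating a 3-month release as 90 days is an assumption made only to keep the numbers simple.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AutomationCadence:
    label: str
    days_between_runs: int  # how often the automation suite is executed

    @property
    def runs_per_year(self) -> int:
        # Whole runs that fit into a 365-day year.
        return 365 // self.days_between_runs


# Assumption: one release every 3 months is approximated as 90 days.
per_release = AutomationCadence("once per release", days_between_runs=90)
weekly = AutomationCadence("weekly", days_between_runs=7)

for cadence in (per_release, weekly):
    print(f"{cadence.label}: {cadence.runs_per_year} runs per year")

# How many times more often defects get a chance to be caught.
print(weekly.runs_per_year // per_release.runs_per_year)
```

Under these assumptions the suite goes from 4 runs a year to 52, which is the kind of figure that can sit next to code coverage on a governance dashboard.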

Test automation – mindset or science?

As is true of most scientific discoveries, the truth lies somewhere in the middle. Analyzing the right set of testing tools, creating environments and spawning the right environments certainly sit on the side of engineering, or science. The motivation to keep adding one more script, identifying the critical path and bringing a collaborative mindset to all team members sit firmly on the side of mindset.

Can scientific analysis and the right mindset come together to increase test automation? Therein lies the billion-dollar business agility question.