To assess delivery assurance in software projects, it may be useful to ask two questions:

- Are we working on the right thing?

- Are we doing it right?

A team or organization that can answer these questions convincingly can feel confident in its progress.

- “Are we working on the right thing?” might include:
  - Problem Definition
  - Adaptive Planning
  - Scope Management
  - Change Management
  - RAID (Risks, Assumptions, Issues, Dependencies)
  - NFRs (Non-Functional Requirements)

- “Are we doing it right?” might include:
  - Practices (safety nets)
  - Quality Assurance
  - Review and Feedback
  - Governance
  - Metrics Reporting
  - Communication Plan

Often, even when we all agree that we want to follow every practice and process, we have to be pragmatic and tailor them to the project without compromising delivery assurance.

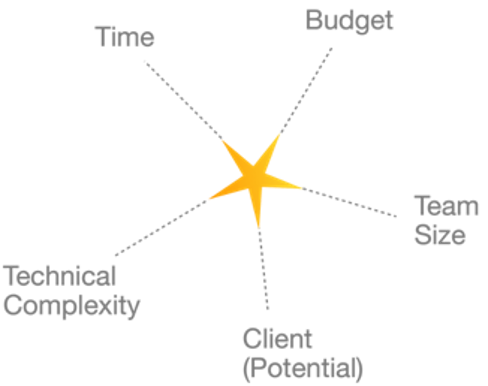

To achieve that, one approach that has helped me is to categorize projects using a set of complexity factors: budget, time, client (potential for future work), team size, and technical complexity.

Depending on the values we choose for these factors, we may arrive at different sets of categories.

For simplicity, let us consider a small company or business unit. A sample categorization might look like this:

| Category (sample) | Budget | Time | Client (potential for future work) | Team Size | Technical Complexity |
|---|---|---|---|---|---|
| Small | <100K | 2-3 weeks | No visibility on future work | <5 people | Not much |
| Medium | 100K-500K | 4-12 weeks | Slight chance of future work | 5-15 people | Some customization |
| Large | >500K | >3 months | Clear visibility | 2 or more teams of >10 people each | Research/study required |
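The sample table can be read as a simple classification rule. The Python sketch below is one way to encode it; it is an illustration under stated assumptions, not a prescribed method. The table does not say how to combine factors that point to different categories, so the sketch assumes a project takes the highest category that any single factor reaches. The `Project` fields, the helper names, and the qualitative labels (`"none"`, `"slight"`, `"clear"`, `"low"`, `"customization"`, `"research"`) are hypothetical, and the table's gaps are closed by assumption: more than 12 weeks counts as Large, and more than 15 people counts as Large.

```python
from dataclasses import dataclass
from enum import IntEnum


class Category(IntEnum):
    # Ordered so that max() picks the most demanding category.
    SMALL = 1
    MEDIUM = 2
    LARGE = 3


# Hypothetical encodings of the qualitative columns in the sample table.
CLIENT_POTENTIAL = {"none": Category.SMALL, "slight": Category.MEDIUM, "clear": Category.LARGE}
TECH_COMPLEXITY = {"low": Category.SMALL, "customization": Category.MEDIUM, "research": Category.LARGE}


@dataclass
class Project:
    budget: float          # same currency unit as the table, e.g. 250_000
    duration_weeks: float
    client_potential: str  # "none" | "slight" | "clear"
    team_size: int         # total people across all teams
    tech_complexity: str   # "low" | "customization" | "research"


def by_budget(budget: float) -> Category:
    if budget < 100_000:
        return Category.SMALL
    if budget <= 500_000:
        return Category.MEDIUM
    return Category.LARGE


def by_duration(weeks: float) -> Category:
    # Assumption: anything under 4 weeks is Small, over 12 weeks is Large.
    if weeks < 4:
        return Category.SMALL
    if weeks <= 12:
        return Category.MEDIUM
    return Category.LARGE


def by_team_size(people: int) -> Category:
    # Assumption: more than 15 people is treated as Large (the table says
    # "2 or more teams of >10 people each", leaving 16-20 unspecified).
    if people < 5:
        return Category.SMALL
    if people <= 15:
        return Category.MEDIUM
    return Category.LARGE


def categorize(p: Project) -> Category:
    """Assumed rule: the project takes the highest category any factor reaches."""
    return max(
        by_budget(p.budget),
        by_duration(p.duration_weeks),
        CLIENT_POTENTIAL[p.client_potential],
        by_team_size(p.team_size),
        TECH_COMPLEXITY[p.tech_complexity],
    )
```

Under this rule, a 3-week, 80K project with 4 people that still needs research would be treated as Large. A more lenient rule, such as a majority vote across factors, is equally defensible; the point is to agree on one rule up front.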

Using the sample categorization above, a guideline for delivery practices/processes might look like this:

| Practice | Large | Medium | Small |
|---|---|---|---|
| Sprints / Iterations | Yes | Yes | No |
| Sprint Length | 2 weeks | 1 week | N/A |
| Milestones | Mandatory | Preferable | No |
| Communication Plan | Yes | Yes | No |
| Metrics | | | |
| Release Burn-up | Yes | Yes | Yes |
| Sprint Burn-down | Yes | Maybe | No |
| SPCR | Yes | Maybe | No |
| Cycle Time | Yes | No | No |
| Velocity Chart | Yes | Maybe | No |
| Defect Turnaround Time | Yes | No | No |
| Spill-over Defects | Yes | No | No |
| Business Metrics | Yes | Yes | Yes |
| Engineering Practices | | | |
| Unit Test Coverage | Yes | Preferable | No |
| Functional Automation | Yes | Optional | No |
| Usability Testing | Yes | If the project is UI-intensive | No |
| UX Review | Yes | If the project is UI-intensive | No |
| Code Review | Yes | Yes | No |
| Performance Test | Yes | Preferable | No |
| NFR Review | Yes | Yes | Yes |
| Continuous Integration | Yes | Preferable | No |
| Continuous Deployment | Yes | Optional | No |
| Staging / Performance Test Environment | Yes | Preferable | No |
| Project Management Tool | Yes | Simple spreadsheet | Simple spreadsheet |
| Metrics Tracking Tool | Yes | Simple spreadsheet | Simple spreadsheet |
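The guideline table can also be kept as data, so a team can look up which practices a category calls for and which ones are left to judgment. The Python sketch below encodes only a subset of the rows. The names `GUIDELINES`, `required`, and `judgment_calls` are hypothetical, and treating "Yes" and "Mandatory" as required (with "Preferable", "Maybe", and similar entries as judgment calls) is an assumption about how to read the table.

```python
# Column order matches the guideline table: Large, Medium, Small.
COLUMNS = ("Large", "Medium", "Small")

# A subset of the table's rows, values copied from the table: (Large, Medium, Small).
GUIDELINES = {
    "Sprints / Iterations": ("Yes", "Yes", "No"),
    "Milestones": ("Mandatory", "Preferable", "No"),
    "Communication Plan": ("Yes", "Yes", "No"),
    "Release Burn-up": ("Yes", "Yes", "Yes"),
    "Sprint Burn-down": ("Yes", "Maybe", "No"),
    "Business Metrics": ("Yes", "Yes", "Yes"),
    "Unit Test Coverage": ("Yes", "Preferable", "No"),
    "Code Review": ("Yes", "Yes", "No"),
    "NFR Review": ("Yes", "Yes", "Yes"),
    "Continuous Integration": ("Yes", "Preferable", "No"),
}

# Assumed reading of the table: these levels mean "must do".
REQUIRED_LEVELS = {"Yes", "Mandatory"}


def level(practice: str, category: str) -> str:
    """Return the table entry for a practice in "Large", "Medium" or "Small"."""
    return GUIDELINES[practice][COLUMNS.index(category)]


def required(category: str) -> list[str]:
    """Practices the guideline treats as required for the category."""
    return [p for p in GUIDELINES if level(p, category) in REQUIRED_LEVELS]


def judgment_calls(category: str) -> list[str]:
    """Practices that are neither required nor ruled out ("Preferable", "Maybe", ...)."""
    return [
        p for p in GUIDELINES
        if level(p, category) not in REQUIRED_LEVELS and level(p, category) != "No"
    ]


if __name__ == "__main__":
    for category in COLUMNS:
        print(category, "required:", required(category))
        print(category, "judgment calls:", judgment_calls(category))
```

For a Medium project, this subset yields Milestones, Sprint Burn-down, Unit Test Coverage, and Continuous Integration as the items to discuss explicitly with the team, which is where the pragmatic tailoring happens.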

As usual, opinions in the Agile world differ on how to handle practices and processes. Here I have simply shared an approach that worked for me.

Best Regards