If you want to further improve the throughput of the Rust quicksort implementation, what are the options you may consider?

Answer

Concurrency is unlikely to help, since the sorting logic is CPU-bound and never blocks: there is no idle waiting time that interleaving tasks could fill.

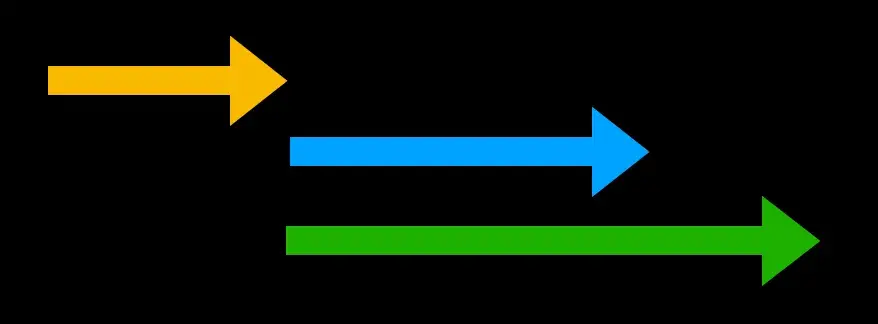

One of the options is parallelism.

We can spread the work across the cores of the target system to improve the throughput further.

Rust slices can be split into non-overlapping mutable regions, which can then be safely handed to parallel threads.
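As a rough illustration of the slice-splitting idea, here is a minimal sketch that uses only the standard library. The function names `lomuto_partition` and `quicksort_threads` and the `depth` parameter are hypothetical, chosen for this example rather than taken from the original implementation. `split_at_mut` produces two mutable sub-slices that cannot overlap, which is why the borrow checker lets a scoped thread work on one of them while the current thread works on the other.

```rust
use std::thread;

/// Lomuto partition: uses the last element as the pivot, moves it to its
/// final sorted position, and returns that position.
/// Assumes `v` is non-empty.
fn lomuto_partition<T: Ord>(v: &mut [T]) -> usize {
    let pivot = v.len() - 1;
    let mut store = 0;
    for i in 0..pivot {
        if v[i] <= v[pivot] {
            v.swap(i, store);
            store += 1;
        }
    }
    v.swap(store, pivot);
    store
}

/// Hypothetical helper: sorts `v` in place. For the first `depth` levels of
/// recursion, the left region is sorted on a new scoped thread while the
/// right region is sorted on the current thread. Below that, the sort is
/// purely sequential.
fn quicksort_threads<T: Ord + Send>(v: &mut [T], depth: u32) {
    if v.len() <= 1 {
        return;
    }
    let mid = lomuto_partition(v);
    // Two non-overlapping mutable regions: [0, mid) and [mid, len).
    let (left, right) = v.split_at_mut(mid);
    // The pivot sits at right[0] and is already in place, so skip it.
    let right = &mut right[1..];
    if depth == 0 {
        quicksort_threads(left, 0);
        quicksort_threads(right, 0);
    } else {
        // The scope joins the spawned thread before returning, so the
        // borrowed sub-slices never outlive `v`.
        thread::scope(|s| {
            s.spawn(|| quicksort_threads(left, depth - 1));
            quicksort_threads(right, depth - 1);
        });
    }
}
```

Calling `quicksort_threads(&mut data, 2)` would use up to four threads at the deepest parallel level. Capping `depth` is a design choice for this sketch: spawning an OS thread per partition would likely cost more than it saves on small regions.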

Rayon, a data-parallelism library for Rust, is worth exploring: the regions produced by each partition step can be handed to it as “tasks” that it schedules across different CPU cores.

The picture for this Chow is an abstract representation of this idea.